Generative AI is now infrastructure. What distinguishes a slick demo from a dependable capability is governance: a repeatable way to decide what data the system may use, which models are allowed, what guardrails apply at runtime, and how quality, risk, and cost are measured and improved over time. Governance done well accelerates delivery; decisions are codified, reviewable, and auditable instead of improvised on Slack.

- Governance is an operating model, not a committee. Policies, tests, gates, and rollback live in the regular product lifecycle.

- Map risks to testable controls. Content, data, security, and operations each get specific checks (retrieval bounds, redaction, allow-lists, budgets, SLOs).

- Data with provenance. Ingest-time PII/PHI redaction, sensitivity labels, per-tenant indexes, and source/licensing metadata.

Why governance now

Two forces converged in 2025. First, GenAI moved into core flows—customer service, knowledge retrieval, marketing operations, analytics—and with that came real exposure: cost overruns, sensitive data leakage, and brand-risk from bad outputs. Second, the regulatory and standards landscape matured (EU-style safety and transparency requirements, NIST-style risk frameworks, and ISO/IEC management systems for AI). You don’t need a new bureaucracy; you need an operating model that makes risk controls part of product delivery and produces evidence by default.

A practical risk taxonomy

Think in four classes, each mapped to controls you can actually test.

- Content risk: hallucinations, unsafe or biased output, ungrounded claims. Controls: retrieval constraints and cite-or-fail patterns; calibrated prompts; output moderation and citation validation; human-in-the-loop for high-impact actions.

- Data risk: PII/PHI exposure, trade secrets, copyright/licensing problems, cross-tenant bleed. Controls: ingest-time redaction and labeling, per-tenant vector stores, strict data provenance, purpose binding.

- Security risk: prompt injection, tool abuse, indirect exfiltration via retrieval or web calls. Controls: input sanitization, domain allow-lists, tool/function allow-lists with schemas, output sandboxes.

- Operational risk: unbounded latency and cost, degradation/drift, vendor outages. Controls: routing with fallbacks, token and budget limits, caching and batching, SLOs for latency/cost/error, canary + rollback.

Governance is simply the habit of attaching these controls to each use case, then proving they work with tests and traces.

From model-centric to system-centric

Many teams keep swapping models to chase quality. That’s expensive and rarely fixes the root cause. Production systems win by design choices around retrieval, tool use, and workflow boundaries:

- Treat the LLM as a reasoning and formatting engine; keep facts in a retrieval layer with recency windows, metadata filters, and per-tenant indices.

- Prefer workflows over unconstrained agents. If you must use agents, freeze the tool list and add budget/time caps, then measure success by the same product KPIs.

- Keep prompts small, versioned, and parameterized. A long prompt is a policy you can’t audit.

Data supply chain and provenance

Your model can only be as trustworthy as its inputs. Build a data supply chain that records source, license, timestamp, tenant, and sensitivity for every document or event. Index only what the use case needs; stale or unknown origin data is the fastest way to hallucinations and legal exposure. Redact PII/PHI at ingest, not at inference; labels and retention policies must travel with the document through embedding, indexing, and caching.

Model strategy and routing

Stop thinking “one model to rule them all.” Think tiers. A small, fast model handles 70–90% of calls; harder questions escalate to a larger one only when a router detects uncertainty or policy triggers (e.g., missing citations). Internal models are great for predictable workloads and cost control; hosted APIs are great for spiky traffic and niche capabilities. Governance decides when each tier is permissible and how requests are logged across tiers.

Evaluation that survives contact with reality

Offline benchmarks are not enough. Build evaluation sets that mirror real tasks: grounded Q&A with required citations, data extraction with exact-match scoring, safety cases with red/amber lines, and adversarial prompts that test jailbreaks. Do allow an LLM-as-judge for speed, but calibrate it against human reviewers and lock the judge prompt/version. Post-deployment, sample real traffic daily, compare new candidates against the champion, and promote only on statistically sound wins across quality, harmful-output rate, latency, and cost.

Guardrails that actually work (layered)

Input filters handle content types, length, Unicode oddities, and known jailbreak signatures. Retrieval constraints keep the model inside the allowed corpus and tenant; everything must be citeable. Model-layer guardrails set temperature, max tokens, and an explicit tool allow-list; tools themselves validate schemas and reject work they can’t complete safely. Output filters catch policy violations, require citations for claims, and redact any late-stage PII. Finally, budget guards fail gracefully when token or cost limits are reached, with clear UX.

What gets measured gets managed.

Peter Drucker

Telemetry, traces, and auditability

If you can’t replay a response, you can’t defend it. Log a trace for every answer: inputs (after redaction), retrieved chunks/URIs, prompt and version, model and params, tools executed and their IO, citations, latency, tokens, unit cost, risk flags, and user feedback. Keep immutable admin/config logs. This single record powers incident response, quality analytics, A/B evaluation, and compliance reporting without manual screenshots and slide decks.

Cost, latency, and reliability—managed together, treat cost per answer and energy per 1k tokens/requests as first-class SLOs next to latency and error rate. Caching, batching, and KV caches are not “nice to have”—they are the difference between a viable product and a cost sink. Add a simple rule: a change that improves quality but doubles cost must ship with routing or caching that brings unit economics back in line. Set per-tenant budgets so one heavy user can’t degrade the whole system.

Anti-patterns to avoid

Prompt sprawl (one prompt per team, no versions), shadow indices (unknown data sources in a private vector DB), demo debt (a prototype in prod without guardrails), and safety theater (policies that don’t match traces). If a rule isn’t enforced in code or CI, it doesn’t exist.

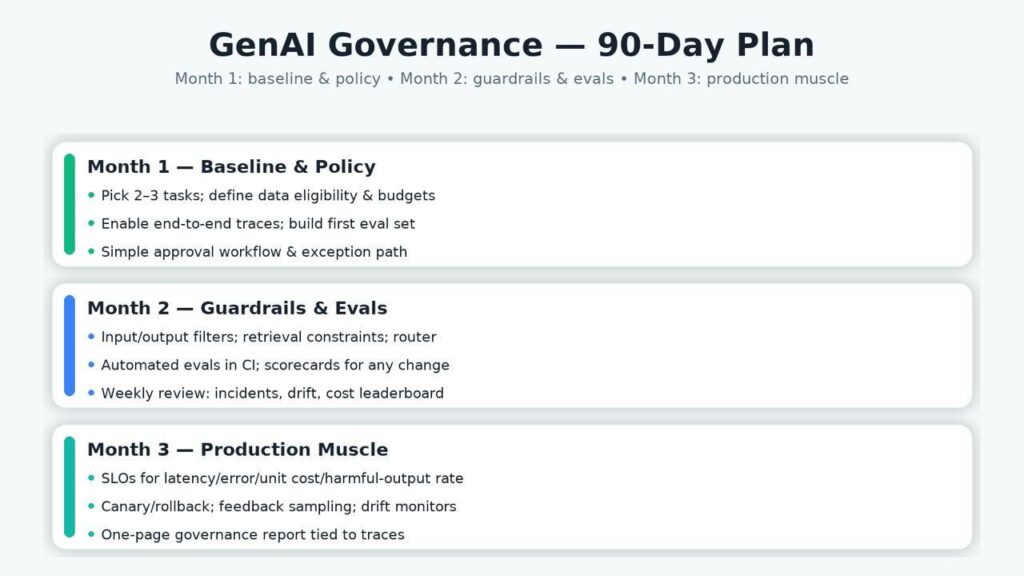

Implementation roadmap (90 days, realistic)

Month 1 — Baseline and policy. Pick two high-signal tasks. Define allowable data, PII rules, citation expectations, and hard budgets. Turn on end-to-end traces and assemble a first evaluation set from real tickets/docs.

Month 2 — Guardrails and evaluation automation. Add input/output filters, retrieval constraints, and a router with fallbacks. Wire evals into CI so any prompt/index/model change yields a scorecard. Start weekly governance reviews with incident and cost leaderboards.

Month 3 — Production muscle. Add SLOs for latency, error, cost per request, harmful-output rate. Enable canary + rollback; sample user feedback; monitor drift. Publish a one-page governance report tied to trace IDs, not slides.

Conclusion

Generative AI becomes reliable when you govern it like infrastructure and improve it like a product. Make data eligibility and budgets explicit, version prompts/models, enforce guardrails at multiple layers, and capture end-to-end traces. Follow a 90-day path (baseline → guardrails/evals → production muscle) to cut risk, stabilize cost/latency, and lift quality—without swapping models at every incident.

Neither by default. Safety comes from controls and evidence. Use both, routed by policy and economics.

You can bound them: retrieval constraints, cite-or-fail, hard eval gates, and human review on high-impact flows.

A product-minded ModelOps lead who treats prompts/models like software: versioned, tested, observable, and rollback-able.