“Vibe coding” is a pragmatic way to run software teams: design every part of the system around the developer’s ability to stay in flow and get rapid proof that a change is correct. In 2025 this is not a nice-to-have. AI assistants, ephemeral cloud previews, contract-first interfaces and feature-flag rollouts have collapsed the time between idea and impact.

Teams that treat flow as the first-class constraint ship more often, fix less after release, and retain engineers. What follows is a dense, hands-on framework—no slogans—covering definitions, architecture, workflow, metrics, rollout and risk.

Who this is for:

- Staff/Principal engineers shaping DevEx, CI/CD, and platform standards.

- Platform/DevEx teams building golden paths, previews, and observability.

- Product engineering teams adopting AI pair programming and flag-guarded releases.

- Flow is the constraint. Optimize for minimal context switches and fast feedback; throughput follows.

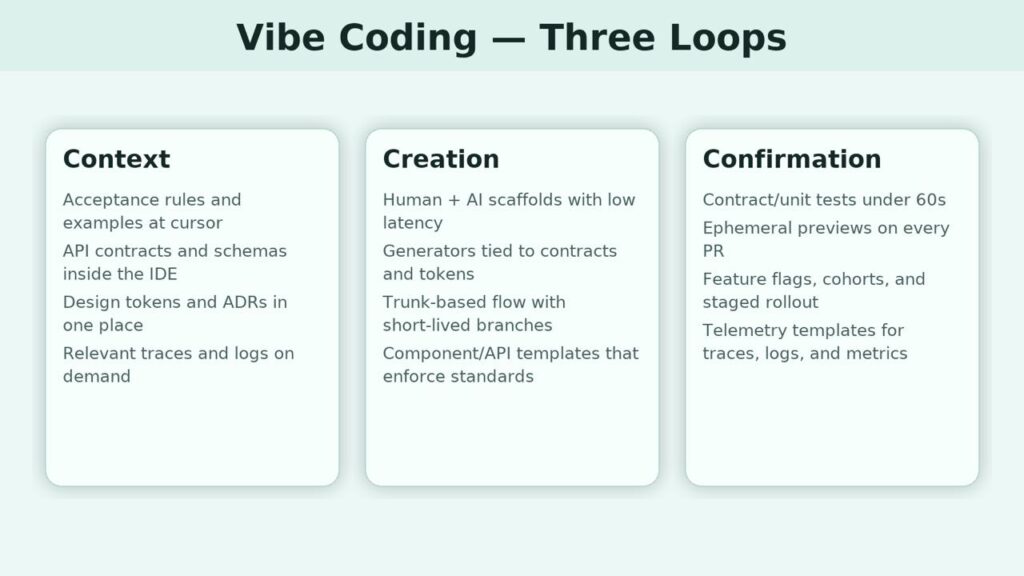

- Three loops drive everything: Context at cursor, Creation with human+AI scaffolds, Confirmation via fast tests & previews.

- Contracts first. Stable API/Schema contracts enable reliable generation, tests, and safer changes.

What Vibe Coding Means

Vibe coding optimizes three loops—context, creation, confirmation—around the engineer’s cursor. Context is everything needed to make a correct change without browsing ten systems: acceptance rules, API contracts, design tokens, past ADRs, and relevant traces/logs—delivered inside the IDE and dev portal. Creation is human+AI code production with low latency: generators tied to contracts and design systems, prompts that encode naming/error patterns, and a trunk-based workflow with very short-lived branches. Confirmation is immediate evidence: contract and unit tests under a minute, realistic preview environments on every PR, baseline observability pre-wired, and feature flags for safe exposure/rollback. If any loop stalls, the platform changes before the developer does.

Why It Matters Now

AI has moved work from boilerplate to “describe → generate → refine,” which shifts the limiter to context quality and validation speed. Cloud previews have collapsed the “works on my machine” phase; stakeholders can click a URL and see the exact change with seeded data. Product organizations measure outcomes, not output, requiring frequent, low-risk releases with telemetry. These shifts reward teams that remove wait states (queues for reviews, QA, environments) and penalize weekly merges and manual setup.

Architecture for a Vibe-Ready Codebase

Design for explicit boundaries and executable artifacts. Publish contracts first (OpenAPI/GraphQL/protobuf) so scaffolding, mocks, and tests can be generated deterministically by both humans and AI. Use design tokens consumed by web/mobile/email to keep UI changes cheap and consistent. Prefer an evented core (pub/sub) to reduce temporal coupling between capabilities. Provide golden paths—one sanctioned way to add a service, endpoint, background job, or UI card—delivered via a CLI that enforces naming, folders, telemetry hooks, contracts, and basic tests. The aim is not optionality; it’s one good default that scales.
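As a rough illustration of "contracts first, generated deterministically", the sketch below turns a tiny OpenAPI-style mapping into handler stubs. The `CONTRACT` shape, the operation names, and the stub format are assumptions made up for this example; a real golden-path CLI would read a full OpenAPI/GraphQL/protobuf document and emit tests and telemetry hooks as well.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Endpoint:
    method: str
    path: str
    operation_id: str


def parse_contract(contract: dict) -> list[Endpoint]:
    """Flatten an OpenAPI-style `paths` mapping into a sorted endpoint list."""
    endpoints = []
    for path, operations in contract.get("paths", {}).items():
        for method, spec in operations.items():
            endpoints.append(Endpoint(method.upper(), path, spec["operationId"]))
    # Sorting makes the output independent of dict ordering in the input.
    return sorted(endpoints, key=lambda e: (e.path, e.method))


def render_stub(endpoint: Endpoint) -> str:
    """Render one handler stub; the body is left for a human or assistant."""
    return (
        f"def {endpoint.operation_id}(request):\n"
        f'    """{endpoint.method} {endpoint.path} (generated stub)."""\n'
        f"    raise NotImplementedError\n"
    )


def generate_handlers(contract: dict) -> str:
    """Produce the full stub module text for a contract."""
    return "\n\n".join(render_stub(e) for e in parse_contract(contract))


# Hypothetical contract used only for illustration.
CONTRACT = {
    "paths": {
        "/orders": {
            "get": {"operationId": "list_orders"},
            "post": {"operationId": "create_order"},
        },
        "/orders/{id}": {"get": {"operationId": "get_order"}},
    }
}

if __name__ == "__main__":
    print(generate_handlers(CONTRACT))
```

Because the output depends only on the contract, running the generator twice yields identical text, which keeps diffs small and makes generated files safe to regenerate.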

Daily Workflow in Practice

A ticket links to acceptance rules and the contract to extend. The engineer edits the schema, runs a generator, and gets handler stubs, client methods, tests, and instrumentation. An AI assistant, primed with project prompts and examples, drafts code; the human strengthens edge cases and failure modes. Pushing the branch spins up a preview environment with realistic seed data; the PR auto-posts its URL. Reviewers interact with the feature, not just the diff, and the author attaches traces for the new request path. Release happens behind a feature flag to a small cohort with error-budget gates; observability is already present because it’s part of the scaffold. The whole loop keeps the engineer in flow and produces hard evidence quickly.
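The "small cohort with error-budget gates" step can be pictured as a small decision function. This is a minimal sketch under stated assumptions: the stage list, the 1% error threshold, and the minimum sample size are hypothetical values, not recommendations from this playbook.

```python
# Hypothetical rollout stages, as percentages of traffic.
STAGES = [1, 5, 25, 50, 100]


def next_rollout(current_percent: int, errors: int, requests: int,
                 max_error_rate: float = 0.01, min_requests: int = 100) -> int:
    """Decide the next exposure level for a flag-guarded release.

    - Too little traffic observed: hold at the current level.
    - Error rate above the budget: roll back to 0%.
    - Otherwise: advance to the next stage (or stay at 100%).
    """
    if requests < min_requests:
        return current_percent
    if errors / requests > max_error_rate:
        return 0
    for stage in STAGES:
        if stage > current_percent:
            return stage
    return 100


if __name__ == "__main__":
    print(next_rollout(5, errors=2, requests=1000))   # healthy: advance
    print(next_rollout(5, errors=50, requests=1000))  # over budget: roll back
```

The gate only works if the telemetry it reads already exists at release time, which is why the workflow ships instrumentation as part of the scaffold.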

Platform Expectations

A vibe platform is opinionated and boring to operate. The dev portal is the source of truth for services, contracts, runbooks, incident notes, design tokens, and example prompts. Semantic search spans code, schemas, ADRs, and incidents and returns snippets to the IDE. CI prioritizes speed: contract and unit suites finish in under 60 seconds, while heavier suites run asynchronously and report back. Ephemeral previews match prod topology; seed scripts make data reproducible. Feature flags are first-class, with staged rollout and kill switches; moving traffic between cohorts requires no code change. Observability templates ensure every endpoint emits traces, structured logs, and minimal metrics without manual wiring.
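One common way to get staged rollout and a kill switch without code changes is deterministic hash bucketing, sketched below. The `Flag` fields and the `bucket` helper are hypothetical names for this example; a real flag service would add targeting rules, persistence, and auditing.

```python
import hashlib
from dataclasses import dataclass


@dataclass
class Flag:
    name: str
    rollout_percent: int = 0  # 0..100, changed at runtime by the flag service
    killed: bool = False      # kill switch overrides everything else


def bucket(flag_name: str, user_id: str) -> int:
    """Map a (flag, user) pair to a stable bucket in the range 0..99."""
    digest = hashlib.sha256(f"{flag_name}:{user_id}".encode()).digest()
    return int.from_bytes(digest[:4], "big") % 100


def is_enabled(flag: Flag, user_id: str) -> bool:
    """A user sees the feature if not killed and their bucket is in range."""
    if flag.killed:
        return False
    return bucket(flag.name, user_id) < flag.rollout_percent


if __name__ == "__main__":
    flag = Flag("new-checkout", rollout_percent=10)
    exposed = sum(is_enabled(flag, f"user-{i}") for i in range(1000))
    print(f"{exposed} of 1000 users exposed at 10%")
```

Because each user's bucket is fixed, raising `rollout_percent` only adds users to the cohort; nobody who already saw the feature loses it until the flag is lowered or killed.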

Metrics That Matter

Treat throughput as a by-product of flow. Track Time to First Signal (TTFS): the minutes from pushing a change to the first reliable result (tests passing or preview live). Keep it under ten minutes and resist regressions. Measure feedback latency for core suites; once it exceeds a minute, engineers start switching tasks while they wait. Monitor flow continuity (uninterrupted ≥45-minute blocks per engineer per day) to detect process noise. For reliability, watch change failure rate and MTTR on flag-guarded releases. For team scalability, target onboarding-to-first-PR in hours, using golden paths and generators.

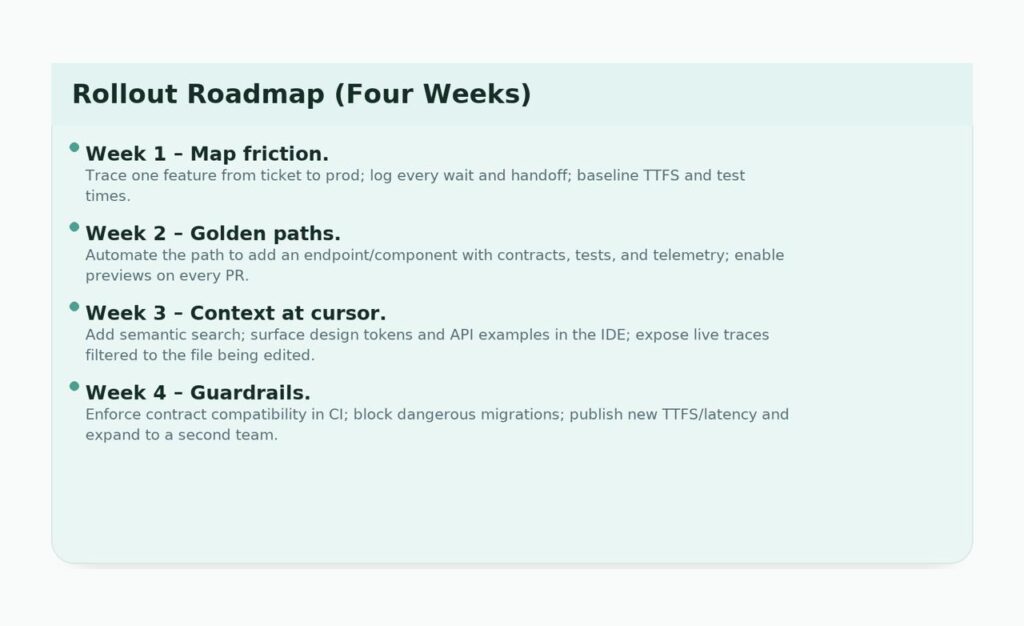

Rollout Roadmap (Four Weeks)

Week 1 – Map friction. Trace one feature from ticket to prod; log every wait and handoff; baseline TTFS and test times.

Week 2 – Golden paths. Automate the path to add an endpoint/component with contracts, tests, and telemetry; enable previews on every PR.

Week 3 – Context at cursor. Add semantic search; surface design tokens and API examples in the IDE; expose live traces filtered to the file being edited.

Week 4 – Guardrails. Enforce contract compatibility in CI; block dangerous migrations; publish the new TTFS and feedback-latency numbers against the Week 1 baseline, then expand to a second team.
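The Week 4 compatibility guardrail can start as a small diff between the published contract and the proposed one. The sketch below assumes a deliberately simplified contract shape (operation name mapped to response fields and their types), which is an assumption for this example; real OpenAPI or protobuf checks also need rules for required request fields, enums, and more.

```python
def breaking_changes(old: dict, new: dict) -> list[str]:
    """List changes that would break existing consumers of `old`.

    Removing an operation, removing a field, or changing a field type is
    treated as breaking; adding operations or fields is allowed.
    """
    problems = []
    for op, old_fields in old.items():
        if op not in new:
            problems.append(f"{op}: operation removed")
            continue
        for field, old_type in old_fields.items():
            new_type = new[op].get(field)
            if new_type is None:
                problems.append(f"{op}.{field}: field removed")
            elif new_type != old_type:
                problems.append(f"{op}.{field}: type changed {old_type} -> {new_type}")
    return problems


def ci_exit_code(old: dict, new: dict) -> int:
    """Return a process exit code: non-zero fails the build."""
    problems = breaking_changes(old, new)
    for line in problems:
        print(f"BREAKING: {line}")
    return 1 if problems else 0


if __name__ == "__main__":
    # Hypothetical contracts used only for illustration.
    published = {"get_order": {"id": "string", "total": "number"}}
    proposed = {"get_order": {"id": "string", "total": "string", "note": "string"}}
    raise SystemExit(ci_exit_code(published, proposed))
```

Run in CI against the last published contract, a check like this turns a contract break into a failed build rather than a downstream incident.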

Economics and Business Impact

Queues are the hidden tax. Waiting for environments, for QA to reproduce locally, or for reviewers to compile the app adds variance and rework. When previews are automatic and contracts enforce shape, reviews focus on behavior and design, not setup. Flags plus error budgets let product run more experiments without reserving rollback time. Standardized scaffolds reduce platform support tickets; templated observability compresses time to root cause because traces look the same across services. The net effect is a shorter idea-to-impact loop and fewer late surprises.

Governance, Security, Risk

Route AI assistants through approved providers; redact sensitive payloads and log prompts for auditability. Enforce SSO, short-lived tokens, least privilege, and just-in-time elevation. Scan for secrets and PII pre-commit and in CI. Fail the build on contract breaks to protect downstream consumers. Make migrations reversible and ship non-reversible ones behind flags with explicit back-out plans. Mask personal fields at ingestion to avoid regulated-data leaks in logs.
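Redaction before a payload reaches an AI provider or a log sink can be sketched as a recursive scrub. The key list and the email pattern below are illustrative assumptions, not a complete PII policy; production systems typically rely on a vetted detection library and a reviewed field inventory.

```python
import re

# Illustrative only: a real policy would cover many more identifiers.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
SENSITIVE_KEYS = {"password", "token", "ssn", "email"}


def redact_text(text: str) -> str:
    """Replace anything that looks like an email address in free text."""
    return EMAIL.sub("[REDACTED_EMAIL]", text)


def redact_payload(value):
    """Recursively mask sensitive keys and scrub strings in nested data."""
    if isinstance(value, dict):
        return {
            key: "[REDACTED]" if str(key).lower() in SENSITIVE_KEYS
            else redact_payload(item)
            for key, item in value.items()
        }
    if isinstance(value, list):
        return [redact_payload(item) for item in value]
    if isinstance(value, str):
        return redact_text(value)
    return value


if __name__ == "__main__":
    payload = {"user": {"email": "a@b.com", "note": "mail bob@example.com"}}
    print(redact_payload(payload))
```

Applying the same function at log ingestion and at the AI gateway keeps the masking rules in one place.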

Anti-Patterns to Avoid

Treating AI as autocomplete (instead of a collaborator with reasoning and tests) degrades quality. Long-lived branches make previews stale; prefer trunk with small merges. Monolithic test runs destroy flow; split a fast smoke layer from slower suites and refuse to let the fast layer regress. Manual documentation rots; generate it from contracts and keep examples executable where possible. Tool sprawl fragments the experience; give one blessed way to create services, endpoints, and telemetry, and retire the alternatives.

A Practical Migration from a Monolith

Start at the edges: define contracts for the top endpoints and generate clients for each consumer. Introduce previews that deploy only changed modules, and gate access via feature flags so QA and product can exercise changes safely. Wrap logging/tracing with a tiny SDK that sets correlation IDs and standard attributes, and include it in scaffolds. Move one visible surface to design tokens and a shared component library. Over six weeks, reviews get faster, bugs become reproducible, and telemetry becomes consistent, which makes the first domain extraction an incremental step, not a rewrite.

Speed isn’t how fast you type — it’s how fast your system tells you the truth.

Vibe Coding Playbook, 2025

Conclusion

Vibe coding is disciplined engineering around developer flow. Make context immediate, creation low-latency, and confirmation automatic; measure TTFS and feedback latency; ship behind flags; keep contracts and observability non-negotiable. The payoff is a safer, faster path from requirement to result—and a codebase that grows with the team instead of against it.

Why Ficus Technologies?

Ficus Technologies is not just talking about vibe coding — we practice it.

We design platforms, workflows, and developer experiences that keep teams in flow:

- Hands-on expertise – we’ve built golden paths, preview environments, and contract-first systems for companies that need to deliver fast without losing reliability.

- Developer-first approach – everything we create is optimized for the engineer’s cursor: context at hand, rapid feedback, and confidence in changes.

- Proven results – our clients ship more often, recover faster, and scale teams with less friction because the engineering environment works with them, not against them.

At Ficus Technologies, vibe coding is not a buzzword — it’s the way we help organizations grow resilient, high-throughput engineering cultures.

No. AI helps, but the core is feedback design—contracts, previews, and tests that prove correctness quickly.

Begin at the edges: publish contracts for top endpoints, add previews on PRs, and introduce a golden path (CLI scaffold) for one change type.

TTFS (push → first signal), core test latency, flow continuity (≥45-min focused blocks/day), change-failure rate, MTTR on flagged rollouts.

Gates shift left: contracts and fast tests remove late rework. Speed increases because waits and rollbacks shrink.